Better AI Starts with Verifiable Data via The Sui Stack

The future of AI isn’t just about smarter models; it’s about verifiable data, and the Sui Stack is building the trust layer that makes it possible.

AI adoption is moving fast, but its foundations haven’t caught up.

Behind every model, every chatbot, and every agent lies one simple dependency: data.

And as the saying goes, garbage in, garbage out. If the data feeding AI systems is incomplete, tampered with, or unverifiable, no amount of model fine-tuning can make the outputs truly reliable. Most of today’s data pipelines are exactly that: opaque, mutable, and impossible to audit once AI models consume them.

That lack of visibility doesn’t just create compliance risk, it leads to worse outcomes for users. Think chatbots confidently citing wrong facts, financial models making predictions without transparency, or healthcare AI trained on unverified inputs. When no one can prove where data came from or how it’s been changed, trust becomes a bottleneck, and product quality erodes.

It’s time to fix that.

From raw data to verifiable intelligence

The next generation of AI infrastructure won’t just be about bigger GPUs or faster APIs, it’ll also be about verifiable data flows.

That’s where the Sui Stack comes in: a composable foundation that uniquely empowers AI systems with verifiability, privacy, and control at every step of the AI lifecycle.

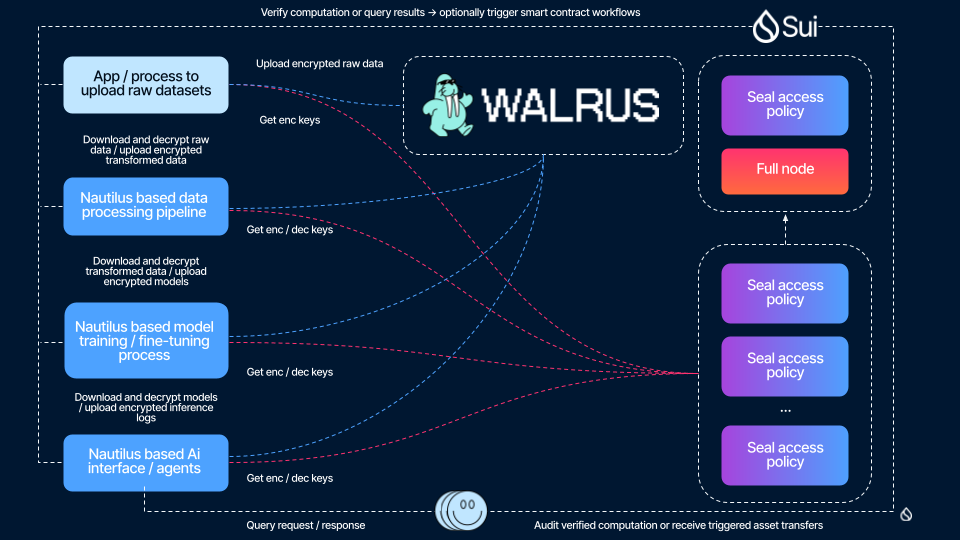

Think of it as the trust layer for intelligent systems. Each component has a clear role:

- Walrus: a developer platform enabling data markets for the AI era. It makes data across industries trustworthy, provable, monetizable, and secure.

- Seal: programmable encryption and access control. Define who can decrypt what, for how long, and under which rules.

- Nautilus: confidential and verifiable computing. Run model inference and AI agents inside trusted enclaves that produce cryptographic proof of correctness.

- Sui: the coordination and provenance layer that ties it all together onchain, enforcing access rules and logging verifiable receipts.

Together, they form the Sui Stack, a new way to build AI systems that respects autonomy, data rights, and transparency.

Why this matters for builders

For developers building AI systems, especially those handling sensitive or high-value data, today’s infrastructure often falls short. Provenance is hard to establish, access control is clunky, and the compute is effectively a black box. Data moves without a verifiable trail, and no one can tell whether it’s been altered along the way.

This uncertainty slows innovation and erodes trust. To stay in control, teams often over-centralize their data in cloud silos, or, in the name of collaboration, over-share it across systems they can’t fully govern. Even when agentic workflows appear to run correctly, there’s still no cryptographic proof that they did so as intended.

The Sui Stack removes that friction. Together, Walrus, Seal, Nautilus, and Sui give builders the ability to store, gate, and compute with verifiable guarantees. Developers can start small: encrypt a dataset and enforce an access rule, or add verifiable proof to a model training job or agentic inference runs. From there, they can scale toward a composable, fully auditable data pipeline where trust is built in by design.

Why this matters for end users

End users need to have confidence in the answers, recommendations, and outcomes AI systems provide. When models are trained on unverifiable or incomplete datasets, the results can be misleading.

When data pipelines are unverifiable, users often pay the price in subtle but serious ways:

- Unreliable answers: Chatbots or copilots that sound confident but are trained on incomplete or unverified data can mislead users, including sometimes in high-stakes situations.

- Hidden bias: When the source of training data is opaque, it’s impossible to detect or correct systemic bias, leading to unfair or unsafe decisions.

- Lack of accountability: If an AI-generated decision affects someone, like in cases of a loan approval, a medical diagnosis, or a recommendation, trust can collapse if no one can trace how that decision was made.

A verifiable stack changes that dynamic.

When models are trained and run on provable, policy-enforced, and auditable data, users get more consistent answers, clearer explanations, and a stronger basis for trust. It’s the difference between “the model says so” and “here’s why you can trust what it says.”

What this looks like in practice

Across the Sui and Walrus ecosystems, builders are already putting these ideas to work in ways that showcase the impact of verifiable data.

Some are building private inference and agentic workflows, where models are hosted on Walrus, encrypted with Seal, and accessed only through Nautilus enclaves under strict, programmable permissions, such as token gating. Others are deploying secure analytics environments for enterprises, turning every query and embedding lookup into an auditable event that strengthens accountability.

Through collaborative data rooms, separate teams can share encrypted data, run computations inside Nautilus enclaves, with participants receiving verifiable receipts showing what data was used and how. Meanwhile, AI marketplaces are emerging where builders can register datasets, models, or agents, define their own licensing terms, and let AI systems access them directly.

Each of these use cases shares the same foundation: verifiable data, programmable access, and trustworthy compute brought together through the Sui Stack.

Walrus: the data backbone of the AI era

At the center of this vision sits Walrus, the developer platform for the data economy. Walrus isn’t just decentralized storage, it anchors trust in the world’s data. Every file and model on Walrus carries a verifiable ID, every update is tracked & provable, and every dataset can be licensed or monetized securely.

From AI agents pulling real-time data to enterprises building auditable AI pipelines, Walrus makes data a first-class citizen in the AI stack, not an afterthought.

What’s next

This is just the beginning. Over the coming weeks, we’ll explore how builders are already using these primitives in new ways: wrapping model training & inference pipelines with proofs and policies, creating programmatic licensing for premium data & AI models, and enabling intelligent systems to transact through verifiable, privacy-preserving payments.

Each of those builds on the same foundation: verifiable data.

Because the future of AI won’t just be smart, it’ll be provable.